One of the signs of the scene's evolution is its use of new technologies. Every time you notice that you have to upgrade your computer in order to watch the latest demos, it means the scene has advanced to a new stage. And as of today, the latest advancement is shaders.

This article is neither an in-depth analysis of shaders nor a tutorial. Rather, it describes what you need to do to get shaders running, including all the quirks of vertex shaders.

What are those shaders?

Shaders are another step in the evolution of graphics hardware. I won't go into the history of shaders, but as you might expect, they were first introduced in high-end rendering software and hardware and only later reached consumer hardware.

A shader is a short program that works on a single piece of data. Typically, shaders are executed in hardware by superscalar vector processors, carefully designed to carry out only that single task.

Currently, there are only two types of shaders in the graphics pipeline: vertex shaders and pixel shaders. A vertex shader is a program that processes a single vertex. It receives input data (such as the vertex position in object space, the vertex normal, light positions or directions, etc.) and computes output data (such as the transformed vertex position, texture coordinates, the final lighting color, etc.). A vertex shader is executed once for every vertex of the model it is assigned to, and it does not produce any new vertices. As you can easily figure out, vertex shaders completely replace the TnL (transform and lighting) unit, whose behavior is now known as fixed-function vertex processing.

A pixel shader is a program that processes a single pixel. It receives input data (such as texture coordinates, textures, colors calculated from lighting, etc.) and it computes output data (such as pixel color, depth value, etc.).
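As a rough mental model (not a description of how the hardware is built), a shader stage behaves like a function applied independently to each element of an array. The minimal C++ sketch below uses hypothetical VertexIn/VertexOut types and a hypothetical runVertexStage function; it only illustrates that one shader invocation per vertex yields exactly as many outputs as inputs.

```cpp
#include <cassert>
#include <vector>

// Hypothetical, simplified vertex formats for illustration only.
struct VertexIn  { float pos[3]; float normal[3]; float uv[2]; };
struct VertexOut { float pos[4]; float diffuse[4]; float uv[2]; };

// Runs a "vertex shader" once per input vertex. The shader sees one vertex
// at a time and cannot create or delete vertices, so the output array has
// exactly as many elements as the input array.
template <typename Shader>
std::vector<VertexOut> runVertexStage(const std::vector<VertexIn>& in, Shader shader)
{
    std::vector<VertexOut> out;
    out.reserve(in.size());
    for (const VertexIn& v : in)
        out.push_back(shader(v));  // one invocation per vertex
    assert(out.size() == in.size());
    return out;
}
```

A pixel shader follows the same pattern, with pixels produced by the rasterizer in place of vertices.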

The APIs

On the Windows platform, we have two major 3D APIs: DirectX Graphics (a.k.a. Direct3D) and OpenGL. Direct3D is one of the very few Microsoft APIs that have evolved by breaking compatibility with previous versions. At runtime, installing the latest version of Direct3D still gives you all the previous versions, but at the source-code level each new version of the API is not backwards compatible with the old one. This is a big advantage: new technologies often require changes to the API, and hybrid versions with partial compatibility tend to become a big programming problem, the Win32 API being one example.

OpenGL, on the other hand, is a very clean and simple graphics API, cleaner and simpler to use than even the latest version of Direct3D. It was designed from scratch as a graphics API. Unfortunately, its implementation on Windows is not perfect, because Microsoft prefers to support its own API. An advantage of OpenGL is that it is portable: unlike Direct3D, it is available on other software and hardware platforms. A disadvantage is that the latest technologies are supported only as extensions, not as part of the standard.

Fortunately, when it comes to shaders, the two APIs are not that different. If you use the NVidia shader compiler, you can run your shader code on both Direct3D and OpenGL. At the time of writing, the OpenGL 2.0 standard is about to be released, so things should get better for OpenGL.

In this article I focus on handling shaders in Direct3D 8.x. Direct3D is the more difficult API, so once you have shaders running there, getting them to run in OpenGL is not a problem. Direct3D 9.0 mainly introduces new shader versions, and I will cover those too. Another reason for writing about Direct3D 8.x rather than 9.0 is that 8.x is the version installed by default with Windows XP; many users still haven't installed 9.0.

The Graphics Pipeline

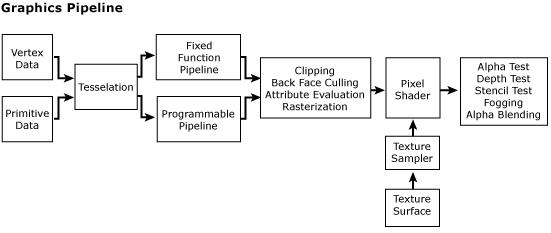

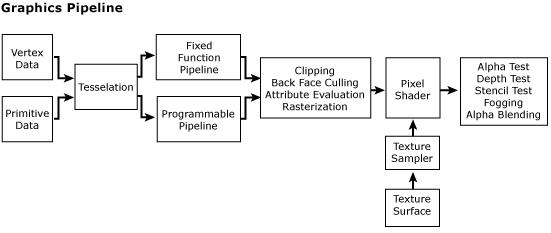

What good would be an article about shaders if it didn't show where the shaders fit in the rendering pipeline? The following diagram was ripped directly from our favorite source - MSDN:

As you can see in the above diagram, vertex shaders, labeled as the programmable pipeline, entirely replace the fixed-function (TnL) pipeline. After triangles are rasterized, each pixel is processed by the pixel shader, which replaces the original TSS shaders (TSS stands for texture stage states).

Pixel Shaders

The pixel shaders are much simpler than the vertex shaders at the moment, so I will describe them first.

As mentioned above, a pixel shader processes pixels. It has input registers, which hold input data such as the interpolated output of the vertex shader; output registers, which hold the output data (pixel color and depth); and temporary registers.

There are several versions of pixel shaders, and each version adds something new. Versions 1.0-1.3 are very similar to each other. Version 1.4, introduced by ATI in DirectX 8.1, has some new capabilities and is a step towards version 2.0, which has a lot of new functionality and is available in DirectX 9.0.

The following table shows how many registers of each type are available in each shader version:

| Register | Type | 1.1 | 1.3 | 1.4 | 2_0 | 2_x |
|---|---|---|---|---|---|---|
| t# | Texture (in) | 4 | 4 | 6 | 8 | 8 |
| s# | Sampler (in) | - | - | - | 16 | 16 |
| v# | Color (in) | 2 | 2 | 2 | 2 | 2 |
| c# | Constant float (in) | 8 | 8 | 8 | 32 | 32 |
| i# | Constant int (in) | - | - | - | - | 16 |
| b# | Constant bool (in) | - | - | - | - | 16 |
| r# | Temporary | 2 | 2 | 6 | 12 | 32 |
| p0 | Predicate | - | - | - | - | 1 |
| r0/oC# | Color (out) | 1 | 1 | 1 | 4 | 4 |
| oDepth | Depth (out) | - | - | - | 1 | 1 |

As you can see, the register set grows with each version. The details of instruction types, instruction counts, how many instructions of each type can be executed and how many registers of each type each instruction accepts are all documented in the primary source of knowledge about shaders: MSDN.

The table above lists only the important versions, the ones you are likely to develop your shaders for. Version 1.0 is supported by all hardware with pixel shaders, version 1.2 isn't supported on its own, and version 3.0 isn't supported by any hardware at the moment (but since a 3.0 specification already exists, we can easily guess that some vendor, be it ATI or NVidia, is already working on it).

If hardware supports one version of shaders, it automatically supports all lower versions: shader support is backwards compatible. Here is the popular graphics hardware that supports particular versions of pixel shaders:

| Version | Hardware |
|---|---|
| 1.1 | NVidia GeForce3 |
| 1.3 | NVidia GeForce4 (except GeForce4 MX), Matrox Parhelia |
| 1.4 | ATI Radeon 8500, 9000+ |
| 2_0 | ATI Radeon 9500+ |
| 2_x | NVidia GeForceFX |

I don't know much about cards from other vendors, but some of them do support pixel shaders.
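Because support is backwards compatible, checking whether a card can run a given pixel shader version boils down to a single comparison against the value reported in D3DCAPS8::PixelShaderVersion. The sketch below re-declares the version encoding used by the D3DPS_VERSION macro from the DirectX 8 headers so that it compiles on its own; PsVersion, VersionMajor, VersionMinor and SupportsPixelShader are illustrative names, not SDK functions.

```cpp
#include <cstdint>

// Same encoding as D3DPS_VERSION(major, minor) in the DirectX 8 headers:
// 0xFFFF in the high word, major version in bits 8-15, minor in bits 0-7.
constexpr std::uint32_t PsVersion(std::uint32_t major, std::uint32_t minor)
{
    return 0xFFFF0000u | (major << 8) | minor;
}

constexpr std::uint32_t VersionMajor(std::uint32_t v) { return (v >> 8) & 0xFFu; }
constexpr std::uint32_t VersionMinor(std::uint32_t v) { return v & 0xFFu; }

// Every version implies all lower ones, so "can this card run my ps.1.1
// shader?" is one comparison. Cards without pixel shaders report 0.
constexpr bool SupportsPixelShader(std::uint32_t capsPsVersion,
                                   std::uint32_t major, std::uint32_t minor)
{
    return capsPsVersion >= PsVersion(major, minor);
}
```

For example, a Radeon 8500 reporting 1.4 passes the check for a ps.1.1 shader, while a GeForce3 reporting 1.1 fails the check for ps.1.4.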

The differences between pixel shader versions 1.0-1.3 are minor. Basically, the lower versions accept fewer registers of certain types in a single instruction, and so on.

The major difference between pixel shader 1.4 and 1.3 is that 1.4 decouples texture sampling from texture coordinates. Texture coordinates tell where a texture should be sampled, but they can also carry the vertex color, position, normal or anything else; this is how per-pixel lighting, such as real Phong shading, or bump mapping can be done. The distinction between texture sampling and texture coordinates becomes more obvious in pixel shader 2.0, where you can have up to 16 textures (16 sampler registers) but only 8 texture coordinate sets (8 texture registers) to address them.

Another difference between 1.4 and 1.3 is that version 1.4 introduces phases. A pixel shader can consist of two phases, separated by the phase instruction, which doubles the number of allowed arithmetic instructions per shader (from 8 to 16). The two phases are executed one after the other, first the first phase and then the second, so they behave somewhat like two passes inside a single shader.

Pixel shader 2.0 is a major step in shader evolution. It introduces many instructions already known from vertex shaders, such as rcp and rsq, as well as other new instructions, such as abs and sincos.

Pixel shader 2.0 extended, a.k.a. 2_x, supported by the NVidia GeForceFX, introduces flow control instructions, so you can perform branches, subroutine calls and loops. The pixel shader assembly language becomes so complex that you will want to move to HLSL (the High Level Shading Language from DirectX 9) or Cg (NVidia's C for graphics) to write and maintain your code easily.

Pixel Shader Examples

Writing pixel shaders is simple. However, before you begin to write a shader for your engine, first ask yourself this question:

- Do I really need a shader to do that?

Indeed, many common rendering techniques don't require pixel shaders: TSSes handle typical texture blends and additions very well. The advantage of pixel shaders over TSS shaders is their great flexibility. TSSes are often poorly and inconsistently supported, because Microsoft defined them only vaguely, so apart from the standard TSS setups every vendor supports them in its own way. Pixel shaders, on the other hand, support any combination of textures, colors and constants, provided that you stick to the strict rules defined in MSDN.

Nevertheless, you should ask yourself that question and stick to TSS shaders whenever possible, because most hardware still doesn't support pixel shaders and, unlike vertex shaders, they are not emulated. Emulating pixel shaders in software would be far too slow, because they are executed on a per-pixel basis: a pixel shader runs at least 480,000 times to fill an 800x600 frame, and often many more times than that.
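To see why software emulation of pixel shaders is out of the question, it helps to do the arithmetic. In the sketch below, the overdraw factor (how many times each screen pixel is shaded per frame, on average) and the frame rate are assumed example values, not measurements.

```cpp
#include <cstdint>

// Number of pixels in one full-screen pass.
constexpr std::uint64_t PixelsPerFrame(std::uint64_t width, std::uint64_t height)
{
    return width * height;
}

// Pixel shader executions per second for an assumed average overdraw.
constexpr std::uint64_t ShaderRunsPerSecond(std::uint64_t width, std::uint64_t height,
                                            std::uint64_t overdraw, std::uint64_t fps)
{
    return PixelsPerFrame(width, height) * overdraw * fps;
}

// 800x600, assumed overdraw of 2, 60 frames per second:
static_assert(PixelsPerFrame(800, 600) == 480000, "one full-screen pass");
static_assert(ShaderRunsPerSecond(800, 600, 2, 60) == 57600000, "57.6 million runs per second");
```

Even a one-instruction shader would have to run tens of millions of times per second on the CPU, on top of texture sampling and filtering.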

A DirectX 8 pixel shader consists of four parts:

- pixel shader version definition,

- constant definitions,

- texture sampling,

- color processing.

Let's examine the following simple shader:

ps.1.0                        ; we use only version 1.0
def c0, 0.1, 0.1, 0.1, 0      ; emissive (c0) = [0.1, 0.1, 0.1, 0]
tex t0                        ; we use texture and coords from stage 0
mad r0, t0, v0, c0            ; output (r0) = tex0 * diffuse + emissive

The above shader, which needs exactly one arithmetic instruction, modulates the texture sampled into t0 with the diffuse color and adds a small emissive color.

You can easily deduce that:

- a pixel shader version 1.0 is created,

- a constant is defined in the code (there are no immediate constants, so the constant registers must be used instead),

- the texture from stage 0 is sampled using texture coordinates from stage 0 and the sampled color is put in register t0,

- the diffuse color from lighting is contained in the v0 input register,

- the resulting color that is output from the shader is returned in the temporary register r0.

If you need the specular color, it arrives in register v1.
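To make the arithmetic of the example explicit, here is a CPU sketch of what the shader computes for a single pixel. It is a simplification: only the final color is clamped to [0, 1], and the limited internal precision and range of real ps.1.x hardware are ignored. The names Vec4, Mad, Saturate and ExampleShader are my own, not part of any API.

```cpp
#include <algorithm>
#include <array>

using Vec4 = std::array<float, 4>;  // r, g, b, a

// "mad dest, src0, src1, src2": dest = src0 * src1 + src2, per component.
constexpr Vec4 Mad(const Vec4& a, const Vec4& b, const Vec4& c)
{
    Vec4 r{};
    for (int i = 0; i < 4; ++i)
        r[i] = a[i] * b[i] + c[i];
    return r;
}

// The color written to the frame buffer is limited to [0, 1].
constexpr Vec4 Saturate(const Vec4& v)
{
    Vec4 r{};
    for (int i = 0; i < 4; ++i)
        r[i] = std::clamp(v[i], 0.0f, 1.0f);
    return r;
}

// One "pixel" of the example: r0 = t0 * v0 + c0.
constexpr Vec4 ExampleShader(const Vec4& texel /* t0 */, const Vec4& diffuse /* v0 */)
{
    const Vec4 emissive = {0.1f, 0.1f, 0.1f, 0.0f};  // def c0, 0.1, 0.1, 0.1, 0
    return Saturate(Mad(texel, diffuse, emissive));
}
```

A black texel therefore still yields the emissive gray (0.1), and a white texel under white light saturates to 1.0.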

This pixel shader can also be replaced by a TSS setup that uses a single texture stage, like this:

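ColorOp[0] = MultiplyAdd;     // result = Arg1 + Arg2 * Arg0
ColorArg1[0] = TFactor;       // emissive
ColorArg2[0] = Texture;
ColorArg0[0] = Current;       // at stage 0, Current is the diffuse color
AlphaOp[0] = Modulate;
AlphaArg1[0] = Texture;
AlphaArg2[0] = Current;
ColorOp[1] = Disable;
TextureFactor = 0x00191919;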

There are two or three other ways to achieve the same thing with TSS shaders.
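The texture factor 0x00191919 is simply the emissive constant 0.1 from the pixel shader converted to an 8-bit-per-channel ARGB color. A sketch of that conversion follows; ToArgb is a hypothetical helper that produces the same 0xAARRGGBB layout as D3DCOLOR. Note that it truncates: 0.1 * 255 = 25.5 becomes 25 (0x19), which matches the value used in the TSS setup, whereas rounding would give 0x1A.

```cpp
#include <cstdint>

// Packs a floating-point color into 0xAARRGGBB. Each channel is clamped to
// [0, 1], scaled to 0..255 and truncated toward zero.
constexpr std::uint32_t ToArgb(float r, float g, float b, float a)
{
    auto channel = [](float v) -> std::uint32_t {
        if (v <= 0.0f) return 0u;
        if (v >= 1.0f) return 255u;
        return static_cast<std::uint32_t>(v * 255.0f);  // truncates
    };
    return (channel(a) << 24) | (channel(r) << 16) | (channel(g) << 8) | channel(b);
}

// The emissive color from "def c0, 0.1, 0.1, 0.1, 0":
static_assert(ToArgb(0.1f, 0.1f, 0.1f, 0.0f) == 0x00191919u, "TextureFactor value");
```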

So where are pixel shaders useful? In many other situations, for example when you want to add three or more textures, each premultiplied by a different factor.

You can now sit down and create your own pixel shaders. It's simple! You can use the MFCPixelShader tool supplied with the DirectX 8 SDK (unfortunately, it is no longer included in the DirectX 9 SDK).

As you have seen, you can define constants directly in your code. When you assemble a shader into object code, these constants are included in the code. However, you can still set constants at runtime with the IDirect3DDevice8::SetPixelShaderConstant() function; it's as simple as that! You can adjust a constant from frame to frame and achieve some nice animations.
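Such an animation could, for example, make the emissive term pulse over time. The sketch below only computes the four floats; PulsingEmissive and its parameters are hypothetical, and the upload itself is shown as a comment because it needs a real IDirect3DDevice8 (the device pointer is assumed).

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper: an emissive gray that oscillates between
// base - amplitude and base + amplitude once per period (in seconds).
// In a DirectX 8 app the result would then be uploaded with something like
//   device->SetPixelShaderConstant(0, color, 1);  // register c0, one 4-float vector
void PulsingEmissive(float timeSeconds, float periodSeconds,
                     float base, float amplitude, float out[4])
{
    assert(periodSeconds > 0.0f && "period must be positive");
    const float kTwoPi = 6.2831853f;
    const float v = base + amplitude * std::sin(kTwoPi * timeSeconds / periodSeconds);
    out[0] = v;     // red
    out[1] = v;     // green
    out[2] = v;     // blue
    out[3] = 0.0f;  // alpha, as in the def of the example shader
}
```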

However, there are many things that can't be done in pixel shaders. These things are done in...

Vertex Shaders

As mentioned above, a vertex shader is a program that processes single vertices.

A vertex shader replaces the conventional fixed-function TnL pipeline.

To take its place, a vertex shader has to perform the following tasks:

- transform vertices from object space to clip space (from which the hardware derives screen coordinates),

- compute the diffuse and specular colors at the vertex, taking into account all lights and the object material,

- compute or just copy texture coordinates,

- calculate fog density for per-vertex fog,

- calculate point size for points.

The tasks carried out by vertex shaders extend well beyond the above, enabling many other effects, including:

- vertex blending (skinning),

- vertex tweening (morphing),

- distorting object geometry,

- preparing data for pixel or TSS shaders, e.g. for per-pixel lighting, light mapping such as anisotropic lighting, bump mapping, etc.,

- texture coordinate transformation and generation,

- preparing texture coordinates for procedural textures,

- and many, many more.

Hence, vertex shaders are a real workhorse, and they steer the pixel shaders, which are usually only a supplement for per-pixel effects. The advantage of vertex shaders over the fixed-function pipeline is that you can do anything you want with them (within the possible bounds, of course). A disadvantage is that you have to do everything yourself: you have to take care of all the processing you want to include, such as vertex blending, all lighting parameters, etc. Another disadvantage is that you have to write several shaders to achieve the same thing in different scenes, because in 1.x vertex shaders the number of lights a shader processes is fixed. This changes with 2.0 vertex shaders, whose static flow control (loops and subroutine calls driven by constant registers) lets you write a vertex shader for a variable number of lights; 2_x vertex shaders add dynamic flow control on top of that.

Unlike pixel shaders, vertex shaders are emulated in software on hardware that doesn't support them. The emulation is accurate and as fast as possible, making use of the available instruction set extensions (3DNow!, SSE, SSE2, etc.). Simple shaders are not much slower in emulation than in hardware. If your graphics card doesn't even have hardware TnL, the vertex shaders you write can actually be faster than the fixed-function pipeline, because they don't contain all the branches the fixed-function code needs to detect how many lights and which features to apply to each vertex.

In DirectX 8 there are only two versions of vertex shaders: 1.0 and 1.1. No graphics hardware supports only 1.0 shaders, so you can rely on 1.1 shaders (which differ only by having an additional address register). All hardware with pixel shaders also supports vertex shaders. In addition, the NVidia GeForce4 MX supports vertex shaders 1.1, even though it lacks pixel shaders.
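Because vertex shaders can be emulated, an application typically decides at device creation whether to use hardware or software vertex processing, based on D3DCAPS8::VertexShaderVersion. The sketch below mirrors the encoding of the D3DVS_VERSION macro from the DirectX 8 headers; the VertexProcessing enum is a hypothetical stand-in for the real D3DCREATE_*_VERTEXPROCESSING flags, and using 1.1 as the minimum simply follows the paragraph above.

```cpp
#include <cstdint>

// Same encoding as D3DVS_VERSION(major, minor): 0xFFFE in the high word.
constexpr std::uint32_t VsVersion(std::uint32_t major, std::uint32_t minor)
{
    return 0xFFFE0000u | (major << 8) | minor;
}

// Hypothetical stand-in for the D3DCREATE_*_VERTEXPROCESSING flags.
enum class VertexProcessing { Hardware, Software };

// Cards reporting vs.1.1 or better run the shaders themselves; everything
// else (including cards that report 0) falls back to software emulation.
constexpr VertexProcessing ChooseVertexProcessing(std::uint32_t capsVsVersion)
{
    return capsVsVersion >= VsVersion(1, 1) ? VertexProcessing::Hardware
                                            : VertexProcessing::Software;
}
```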

Vertex shaders 2.0 are supported by the ATI Radeon 9500+, and 2.0 extended (2_x) by the NVidia GeForce FX. As with pixel shaders, if graphics hardware supports one version of vertex shaders, it also supports all lower versions. Vertex shaders 2.0 introduce new instructions, including static flow control (loops and subroutine calls controlled by constant registers), and vertex shaders 2_x add dynamic flow control and predication. With each version the number of registers rises:

| Register | Type | 1.1 | 2_0 | 2_x |
|---|---|---|---|---|
| v# | Input (in) | 16 | 16 | 16 |
| c# | Constant float (in) | 96 | 256 | 256 |
| i# | Constant int (in) | - | 16 | 16 |
| b# | Constant bool (in) | - | 16 | 16 |
| aL | Loop Count (in) | - | 1 | 1 |
| a0 | Address | 1 | 1 | 1 |
| r# | Temporary | 12 | 12 | 12 |
| p0 | Predicate | - | - | 1 |
| oPos | Position (out) | 1 | 1 | 1 |
| oD# | Color (out) | 2 | 2 | 2 |
| oT# | Texture Coord (out) | 8 | 8 | 8 |
| oFog | Fog (out) | 1 | 1 | 1 |
| oPts | Point Size (out) | 1 | 1 | 1 |

As you can see, the new registers in each new vertex shader version accompany new features and new instructions. The minimum number of constant registers has increased in version 2.0. In fact, some ATI hardware, such as the ATI Radeon 8500, already supports 192 constants, even though it only supports vertex shader 1.1.

Vertex Shader Examples

A vertex shader begins with a version declaration (just like a pixel shader). Optional constant definitions may follow, after which the actual vertex shader code begins.

But setting up a vertex shader is more complex than that. To successfully create and use a vertex shader, you have to:

1) Create a vertex declaration that binds stream data to input registers.

2) Assemble the vertex shader, create it together with the vertex declaration, and set it.

3) Bind the data streams.

4) Set the indices if you want to draw indexed primitives.

5) Now you can finally draw your primitives.

The procedure of setting up the geometry is somewhat complex, and I am not going into the details. The only thing I will say is that it can be very difficult to feed a non-standard set of data to vertex shaders in Direct3D 8. This has been fixed in Direct3D 9, which provides the required flexibility.

So the main thing you have to do before using a vertex shader is to bind stream data to the vertex shader input registers by creating a so-called vertex declaration. A vertex declaration is an array of specially encoded DWORD tokens that you have to define in your program. This is not so easy, but the declaration can also be generated by a script outside of your app.

The tricky part in DirectX 8 is the use of constant definitions. Of course, it is not a problem to set constants from your app with the IDirect3DDevice8::SetVertexShaderConstant() function. But if you use the def instruction inside your vertex shader code, the vertex shader assembler does not include these definitions in the object code. Instead, it outputs them separately as a set of vertex shader declaration tokens, which you have to merge into the vertex declaration you use to create your vertex shader. Fortunately, this very inconvenient obstacle has been removed in Direct3D 9.

So let's write a couple of basic vertex shaders to show what they can do.

vs.1.0                 ; we use only version 1.0
m4x4 oPos, v0, c0      ; transform vertex position

This vertex shader does nothing but transform the vertex position from object space to clip space. The constants c0, c1, c2 and c3 are supposed to contain the full transformation matrix (World*View*Proj), transposed, because of the way the vertex shader processes the matrix. The v0 register contains the vertex position, but this must be indicated in a vertex shader declaration:

const DWORD vtx_decl[] = {
    D3DVSD_STREAM( 0 ),
    D3DVSD_REG( D3DVSDE_POSITION, D3DVSDT_FLOAT3 ),
    D3DVSD_END(),
};

where the D3DVSDE_POSITION constant evaluates to 0 (v0).
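The need for the transposed matrix is easiest to see by spelling out what m4x4 does. In the C++ sketch below (Vec4, Mat4 and the helper functions are my own names), M4x4 performs one dp4 per constant register, just like the instruction, and TransformLikeD3D shows that feeding it the transposed World*View*Proj matrix reproduces Direct3D's row-vector product v * M.

```cpp
#include <array>

using Vec4 = std::array<float, 4>;
using Mat4 = std::array<Vec4, 4>;  // Mat4[row][column], Direct3D style (row vectors)

constexpr float Dp4(const Vec4& a, const Vec4& b)
{
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2] + a[3] * b[3];
}

// "m4x4 oPos, v0, c0" expands to four dp4 instructions, one per register c0..c3.
constexpr Vec4 M4x4(const Vec4& v, const Mat4& c)
{
    return Vec4{Dp4(v, c[0]), Dp4(v, c[1]), Dp4(v, c[2]), Dp4(v, c[3])};
}

constexpr Mat4 Transpose(const Mat4& m)
{
    Mat4 t{};
    for (int r = 0; r < 4; ++r)
        for (int col = 0; col < 4; ++col)
            t[col][r] = m[r][col];
    return t;
}

// Direct3D computes v * M, so each output component is a dot product with a
// *column* of M. Loading the transposed matrix into c0..c3 turns those
// columns into the registers that m4x4 dots against.
constexpr Vec4 TransformLikeD3D(const Vec4& v, const Mat4& worldViewProj)
{
    return M4x4(v, Transpose(worldViewProj));
}
```

With a Direct3D translation matrix (translation in the fourth row), the transposed upload moves the point correctly, while the untransposed one puts the translation into w.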

Let's try to calculate diffuse lighting for a vertex and also output texture coordinates for stage 0. The vertex declaration would be:

const DWORD vtx_decl[] = {
    D3DVSD_STREAM( 0 ),
    D3DVSD_REG( D3DVSDE_POSITION, D3DVSDT_FLOAT3 ),
    D3DVSD_REG( D3DVSDE_NORMAL, D3DVSDT_FLOAT3 ),
    D3DVSD_REG( D3DVSDE_TEXCOORD0, D3DVSDT_FLOAT2 ),
    D3DVSD_END(),
};

This vertex declaration is equivalent to:

D3DFVF_XYZ | D3DFVF_NORMAL | D3DFVF_TEX1
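On the application side, the vertex data in the stream has to match this layout exactly. A minimal sketch, assuming a hypothetical LitTexturedVertex struct and 4-byte floats: the compile-time checks document the 32-byte stride you pass when binding the stream, and the offsets at which the declaration expects position, normal and texture coordinates.

```cpp
#include <cstddef>

// Matches the declaration above: position, normal and one 2D texture
// coordinate set, tightly packed in that order.
struct LitTexturedVertex
{
    float x, y, z;     // D3DVSDE_POSITION  -> v0 (D3DVSDT_FLOAT3)
    float nx, ny, nz;  // D3DVSDE_NORMAL    -> v3 (D3DVSDT_FLOAT3)
    float u, v;        // D3DVSDE_TEXCOORD0 -> v7 (D3DVSDT_FLOAT2)
};

// The stream stride must equal the vertex size: 8 floats * 4 bytes.
static_assert(sizeof(LitTexturedVertex) == 32, "stream stride");
static_assert(offsetof(LitTexturedVertex, nx) == 12, "normal follows position");
static_assert(offsetof(LitTexturedVertex, u) == 24, "texcoords follow normal");
```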

And the vertex shader is:

vs.1.0
m4x4 oPos, v0, c0      ; transform vertex position
m3x4 r0, v3, c4        ; transform normal to world space
; Normalize normal
dp3 r0.w, r0, r0       ; r0.w = dot_product( r0.xyz, r0.xyz )
rsq r0.w, r0.w         ; r0.w = 1 / sqrt( r0.w ) = 1 / length
mul r0, r0, r0.w       ; r0 = normalized( r0 )
; Calculate diffuse
dp3 oD0, r0, c8        ; diffuse = dot_product( normal, light )
; Copy texture coordinates
mov oT0, v7

The above simple vertex shader handles a typical FVF vertex and one directional light. As you can see, all the data, such as transformation matrices, materials, lights, etc., has to be passed in through constants. The vertex shader obtains the vertex normal in v3 and the texture coordinates in v7 (as defined in the vertex declaration). It also obtains the world matrix in the constant registers c4, c5, c6 and c7. If the world matrix contained non-uniform scaling, you would have to use the inverse transpose of the world matrix instead. We also assume that constant register c8 contains the normalized direction towards the directional light, that is, the negated light direction vector. (All these constants are set by the app at run time.)
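To check what the lighting part of this shader computes, here is a CPU sketch of the same steps. It assumes that c8 holds the normalized direction towards the light (the negated light direction); DiffuseIntensity and Float3 are hypothetical names. Only the rows c4 to c6 are used, because the w component that m3x4 produces from c7 is overwritten by the normalization anyway. The final clamp mirrors the [0, 1] range of the oD# color outputs.

```cpp
#include <cassert>
#include <cmath>

struct Float3 { float x, y, z; };

inline float Dp3(const Float3& a, const Float3& b)
{
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// worldRows holds the constant registers c4, c5, c6 (the transposed world matrix).
float DiffuseIntensity(const Float3& normal, const Float3 worldRows[3], const Float3& toLight)
{
    // m3x4 r0, v3, c4: transform the normal (xyz part)
    Float3 n{Dp3(normal, worldRows[0]), Dp3(normal, worldRows[1]), Dp3(normal, worldRows[2])};
    const float lenSq = Dp3(n, n);                   // dp3 r0.w, r0, r0
    assert(lenSq > 0.0f && "normal must not be zero");
    const float invLen = 1.0f / std::sqrt(lenSq);    // rsq r0.w, r0.w
    n = {n.x * invLen, n.y * invLen, n.z * invLen};  // mul r0, r0, r0.w
    const float d = Dp3(n, toLight);                 // dp3 oD0, r0, c8
    return d < 0.0f ? 0.0f : (d > 1.0f ? 1.0f : d);  // color outputs are clamped
}
```

A surface facing the light gets full intensity, and a surface facing away gets 0 rather than a negative color.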

The latest versions of vertex shaders have become so complex that programming them in assembly can get difficult (and I'm not talking about hardcore coders like me here ;-) ). A new language has been created for them: HLSL. It is included in DirectX 9, but you can also write HLSL shaders with ATI's RenderMonkey. There is also NVidia's Cg, which is portable between DirectX and OpenGL, and OpenGL 2.0 is also going to include a similar language. All these high level shading languages are very useful if you want to write cleaner and more modular code. For example, you can reuse your lighting routines in different lighting scenarios, i.e. for different numbers and types of lights in your scenes, without branching.

Conclusion

I encourage you to experiment with shaders, even if you are not going to use them in your next production. Be warned, though, that this technology evolves very quickly and is far from its final state.

Pixel shaders are typically more restrictive and support fewer instructions per shader. This is due to the limited processing power of current pixel shader hardware relative to its workload: usually about 100 times more pixels than vertices are processed, and this ratio can be even larger for simpler geometric models.

However, we see pixel shaders evolving towards the same thing as vertex shaders. Pixel shaders are slowly gaining the precision of vertex shaders (at first they handled only about 12-bit precision), and the two have a lot in common. Soon the hardware will implement hundreds of identical vector processors, which will probably work asynchronously internally, and both pixels and vertices will be processed by them, so that the workload is balanced evenly between the processors.

In a couple of years, either vertex shaders will vanish, or a third kind of shader, the surface shader, will appear, which together with ray traversal units will form realtime raytracers that replace traditional rasterization hardware.

Linx

http://msdn.microsoft.com/

MSDN online, in case you don't have a local copy or the DirectX SDK.

http://www.gamedev.net/reference/list.asp?categoryid=24#129

Four nice tutorials concerning vertex and pixel shaders.

http://tomsdxfaq.blogspot.com/

A general Direct3D FAQ that contains many useful hints, not found anywhere else.

http://d3dcaps.chris.dragan.name/

This is my Direct3D CAPS database. If you're developing an engine and want to check which capabilities particular cards support, this database is for you!